A Post-LLM Framework for Semantic Cognition, Causal Intelligence, and Predictive Action

By Prof. Dr. Uwe Seebacher

Structural and Methods Scientist | International Faculty | Thought Leader on Predictive Strategy and Post-Growth Transformation

Abstract

Large Language Models (LLMs) have transformed how artificial intelligence processes and generates human language. Despite their impressive achievements, these systems remain fundamentally constrained by statistical association, limited semantic grounding, and opaque internal mechanisms. Agentic AI architectures, while expanding the operational capacity of LLMs, inherit these limitations and therefore cannot deliver the transparency, causality, or predictive reliability required for next-generation decision intelligence.

This article introduces Large Understanding Models (LUM) as a novel framework that integrates semantic comprehension, causal inference, contextual reasoning, and explainable predictive actions into a unified architecture. A mathematical modulation formalizes the hypothesized advantages of LUM over LLM-based and agentic systems in understanding quality and decision precision. LUM is proposed as a foundational paradigm for advancing trustworthy, explainable, and contextually aligned AI.

Keywords

Large Understanding Models; LUM; Large Language Models; LLM; Agentic AI; Semantic AI; Causal AI; Explainable AI; Predictive Intelligence; Next-Best-Action; EPP; Decision Systems; predictores.ai

1. Introduction

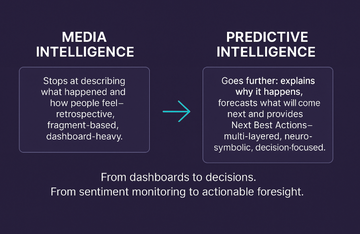

The arrival of Large Language Models (LLMs) marked a decisive transition in machine intelligence. Their ability to generate coherent text, synthesize information, and simulate reasoning processes has produced substantial interest across scientific, governmental, and industrial sectors. Yet in parallel with this rise, critical analyses have highlighted that LLMs operate without true understanding, relying instead on extensive statistical correlations extracted from their training data (Bender et al., 2021).

Efforts to enhance LLMs through agentic architectures—which add planning, orchestration, and autonomous task execution—have improved usefulness but not epistemic integrity. These systems continue to “perform” knowledge rather than possess it, raising concerns around interpretability, reliability, causal validity, and decision robustness (Marcus, 2022).

This intellectual journey has also been profoundly shaped by the international scientific community with whom I have collaborated over the years. The exchange with leading academics and researchers across Europe, the Americas, and Asia has continuously challenged and refined the core assumptions that underpin this work. Among them, I owe particular gratitude to my esteemed colleague and lead faculty member of predictores.ai, Prof. Dr.-Ing. Christoph Legat of the Technical University of Applied Sciences Augsburg. His engineering rigor, conceptual clarity, and unwavering commitment to methodological precision have substantially enriched the development of the LUM framework. The collaborative dialogue with him and with the broader global research network has been instrumental in advancing this line of inquiry to its current form.

This paper introduces Large Understanding Models (LUM) as an intellectual response to the structural limitations of LLM-based and agentic systems. LUM moves from the generation of linguistic sequences toward systems capable of semantic comprehension, causal modeling, contextual grounding, and predictive precision. The result is a model class that offers both cognitive depth and actionable transparency.

2. Limitations of Large Language Models and Agentic AI

2.1 Linguistic Fluency Without Meaning

LLMs excel at producing language that appears meaningful but is generated through token-level probability distributions. Their responses mimic understanding without accessing conceptual structures or semantic coherence (Bender et al., 2021).

2.2 Opacity and the Problem of Explanation

The internal mechanisms of LLMs cannot provide explicit justifications for their outputs. This opacity becomes critical when AI-generated outputs influence corporate strategy, public communication, or real-world decision-making (Marcus, 2022).

2.3 Agentic AI Without Epistemic Grounding

Agentic extensions—tools, planners, and autonomous chains—expand capabilities but inherit fundamental weaknesses: no semantic grounding, no causal modeling, no reliable interpretability. Systems may act, but cannot articulate why an action is valid or causally justified.

These limitations demonstrate that scaling computation cannot compensate for the absence of understanding.

3. Large Understanding Models (LUM): Concept and Architecture

3.1 Conceptual Overview

Large Understanding Models are designed to deliver:

- Semantic comprehension rather than linguistic association
- Causal reasoning rather than correlation
- Contextual interpretation rather than pattern matching
- Explainable decisions rather than opaque outputs
- Predictive actionability rather than generative probability

In this architecture, understanding precedes generation, and prediction precedes action. Where LLMs produce sequences, LUM produces interpretable knowledge. Where agentic systems execute workflows, LUM provides causal justification. Where classical models approximate meaning, LUM constructs it.

3.2 Three-Layer Architecture

A LUM integrates three core components (Fig. 1). The first is a contextual intake layer, in which multimodal data and contextual variables (temporal, organizational, cultural, situational) are integrated to frame interpretation. The second is a hybrid understanding core that combines neural pattern models, symbolic inference mechanisms, causal graphs, and meta-cognitive uncertainty modeling. The third is a predictive action layer that generates Next-Best-Actions (NBAs), precision scores via Estimated Precision Prediction (EPP), and transparent rationales.

Fig. 1: LUM Model Architecture (Source: own illustration)
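The flow through the three layers can be illustrated with a minimal Python sketch. It is not an implementation of LUM: every class, function, and field name below is hypothetical, and the logic inside each layer is a placeholder that only shows how data, context, understanding, and recommendation could be passed from one layer to the next.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Contextual variables named in the text; the string types are assumptions."""
    temporal: str
    organizational: str
    cultural: str
    situational: str


@dataclass
class Framed:
    """Output of the contextual intake layer: data bound to its context."""
    data: dict
    context: Context


@dataclass
class Understanding:
    """Output of the understanding core (hypothetical shape)."""
    findings: list
    confidence: float  # stand-in for meta-cognitive uncertainty, in [0, 1]


@dataclass
class Recommendation:
    """Output of the predictive action layer."""
    action: str
    epp: float  # stand-in for an EPP precision score, in [0, 1]
    rationale: str


def contextual_intake(data: dict, context: Context) -> Framed:
    # Layer 1: attach the context to the raw data.
    return Framed(data=data, context=context)


def understanding_core(framed: Framed) -> Understanding:
    # Layer 2 placeholder: a real core would combine neural, symbolic,
    # causal, and uncertainty components. Here each data item is simply
    # annotated with the situation it was observed in.
    findings = [
        f"{key}={value} ({framed.context.situational})"
        for key, value in framed.data.items()
    ]
    confidence = 0.5 if framed.data else 0.0
    return Understanding(findings=findings, confidence=confidence)


def predictive_action(understanding: Understanding) -> Recommendation:
    # Layer 3 placeholder: low confidence leads to a cautious action.
    action = "act" if understanding.confidence >= 0.8 else "investigate"
    return Recommendation(
        action=action,
        epp=understanding.confidence,
        rationale="; ".join(understanding.findings),
    )


def run_pipeline(data: dict, context: Context) -> Recommendation:
    return predictive_action(understanding_core(contextual_intake(data, context)))
```

The point of the sketch is the ordering: the recommendation is derived only from an explicit understanding object, which in turn is derived only from context-bound data.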

3.2.1 Contextual Intake Layer

A decisive conceptual breakthrough—one that fundamentally reshapes the trajectory of artificial intelligence—lies in the systematic integration of multimodal data with contextual variables such as temporal dynamics, organizational logics, cultural frameworks, and situational constraints.

While this may seem intuitively obvious at first glance, it represents a profound theoretical and methodological departure from the architectures that have dominated the past decade. Conventional models operate largely within a-contextual probability spaces; they generate language but remain blind to the temporal, social, and structural realities in which meaning is embedded. By contrast, the LUM architecture binds data to context in a manner that transforms interpretation from a statistical artifact into an epistemically grounded act.

This integration accomplishes something that current AI research has not yet been able to formalize at scale: it redefines understanding as situated cognition—where perception is inseparable from the conditions under which it emerges. Temporal cues introduce directionality and historicity. Organizational patterns add hierarchy, incentives, and roles. Cultural structures encode norms, metaphors, and tacit knowledge. Situational variables anchor meaning in lived experience rather than abstract distributional space. Bringing these modalities together does not merely enrich a model; it changes the ontology of what machine intelligence can be.

In a field long dominated by the seductive but ultimately limited premise that “more data yields more intelligence,” this contextual fusion marks a paradigm-level correction. It repositions AI from correlation machines to systems capable of reconstructing how human understanding itself arises—within time, within culture, within institutions, within situations. The conceptual leap here is intellectually nontrivial: it demands that intelligence be modeled not as a function of scale but as a function of contextually entangled reasoning.

If future scholars trace the moment when artificial intelligence began its transition from linguistic mimicry to genuine cognitive modeling, they will likely identify this shift—the binding of multimodality to contextuality—as one of its foundational theoretical achievements. In scope, rigor, and consequence, it belongs to the class of advances that historically redefine disciplines.

3.2.2 Understanding Core

At the heart of a Large Understanding Model lies a hybrid reasoning core that fundamentally departs from the architectural logic of today’s language-centric systems. Rather than treating intelligence as the linear extrapolation of statistical patterns, this core synthesizes four distinct modalities of cognition: neural pattern models, symbolic inference mechanisms, causal graphs, and meta-cognitive uncertainty modeling. Each contributes a qualitatively different dimension of understanding, and it is precisely in their integration—not in their isolated capabilities—that a new form of machine cognition emerges.

Neural models capture texture, correlation, and linguistic fluidity; they are excellent at seeing patterns humans cannot. Symbolic systems, often dismissed as relics of early AI, reintroduce structure, hierarchy, and rule-based interpretability—qualities without which meaning collapses into mere association. Causal graphs restore the missing backbone of explanation, enabling the system to ask not only what is but why it is and how it would change under intervention. And meta-cognitive uncertainty modeling establishes an internal epistemic discipline: a system that can evaluate the reliability of its own conclusions behaves fundamentally differently from one that confuses probability with truth.

Taken together, this hybrid module performs something LLMs and agentic architectures cannot: it reconstructs meaning as an interplay between pattern, structure, cause, and confidence. Meaning, in this sense, is no longer the byproduct of statistical scale but an emergent property of coordinated reasoning. And causal relationships are not inferred by approximation but understood as formal dependencies in a dynamic world model.

It is in this shift—from generation to genuine understanding—that LUM transcends the logic of current AI paradigms. Here begins the transition from machines that produce plausible language to systems that cultivate verifiable insight.

In summary, the understanding core is a hybrid reasoning module combining:

- neural pattern models
- symbolic inference
- causal graphs
- meta-cognitive uncertainty modeling

This core enables LUM to reconstruct meaning and causal relationships.
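The difference between observing a system and asking how it would change under intervention can be shown with a toy causal graph. The sketch below is an illustration only: the variables (`campaign`, `awareness`, `sales`), the graph structure, and the coefficients are invented, and the `simulate` helper is hypothetical rather than part of any LUM component.

```python
def simulate(campaign, do=None):
    """Evaluate a toy structural causal model in topological order.

    Assumed graph: campaign -> awareness -> sales, plus campaign -> sales.
    `do` maps a variable name to a forced value. A forced variable ignores
    its usual causes, which is what an intervention means in this setting.
    """
    do = do or {}
    values = {}
    values["campaign"] = do.get("campaign", campaign)
    values["awareness"] = do.get("awareness", 2.0 * values["campaign"])
    values["sales"] = do.get(
        "sales", 3.0 * values["awareness"] + 1.0 * values["campaign"]
    )
    return values


observed = simulate(1.0)
blocked = simulate(1.0, do={"awareness": 0.0})

# Share of the sales effect that runs through awareness in this toy model.
mediated_effect = observed["sales"] - blocked["sales"]
```

Forcing `awareness` to zero removes the indirect path while leaving the direct one intact, so the drop in `sales` isolates the effect that runs through awareness. A purely correlational model has no equivalent operation.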

3.2.3 Predictive Action Layer

One of the most intellectually consequential dimensions of the LUM architecture lies in its transformation of output generation into a fully articulated decision-theoretic process. Instead of producing text as an endpoint, the model synthesizes Next-Best-Actions (NBAs), computes rigorous precision scores through Estimated Precision Prediction (EPP), and articulates transparent decision rationales that expose the causal scaffolding behind each recommendation. This triad of capabilities represents far more than an incremental engineering improvement; it redefines the epistemic nature of artificial intelligence itself.

Current generative systems, no matter how sophisticated, ultimately operate as probabilistic approximators. They produce plausible sequences yet cannot distinguish between linguistic coherence and actionable validity. By contrast, a system that can derive its own NBAs, quantify the uncertainty of each action with calibrated precision, and explicate the reasons for its conclusions is no longer simply generating—it is reasoning. It is engaging in structured deliberation about what ought to follow from what is.

This shift turns AI from a language machine into a decision-theoretic instrument, capable of navigating the complexity of real-world contexts where outcomes carry consequences and where errors propagate backward through economic systems, social institutions, and communicative ecologies. The foundational advance here is conceptual: it recognizes that intelligence—human or artificial—cannot be meaningfully separated from the quality of its decisions and the clarity of the rationale through which those decisions become accountable.

By formalizing EPP as a native evaluative dimension, the architecture embeds a principle that has been largely absent from mainstream AI research: a model that cannot justify its actions cannot be considered intelligent in any meaningful sense. With this integration, AI steps into a new epistemic domain—one where prediction and explanation converge, and where action is treated not as a suggestion but as a rigorously grounded commitment derived from traceable causal logic.

Seen in historical perspective, this marks a turning point in the scientific development of artificial intelligence. It resolves the long-standing separation between generative capability and decision accountability, and it elevates machine intelligence from surface-level fluency to cognitively anchored agency. Future scholars may well regard this conceptual synthesis as one of the decisive moments at which AI began its ascent from linguistic simulation to genuine decision-level cognition.
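A small sketch can make the triad of action, precision score, and rationale concrete. The article does not specify how EPP is computed, so the `epp` values below are assumed inputs in [0, 1]. The `Candidate` class, the `next_best_action` function, and the `min_epp` threshold are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    action: str
    epp: float  # assumed precision estimate in [0, 1], computed elsewhere
    rationale: str  # human-readable justification attached to the action


def next_best_action(candidates, min_epp=0.6) -> Optional[Candidate]:
    """Return the eligible candidate with the highest EPP.

    Candidates below `min_epp` are not eligible. If none qualifies,
    the function returns None instead of recommending a weak action.
    """
    eligible = [c for c in candidates if c.epp >= min_epp]
    if not eligible:
        return None
    return max(eligible, key=lambda c: c.epp)


candidates = [
    Candidate("send_follow_up", 0.72, "open rate rose after the last contact"),
    Candidate("offer_discount", 0.55, "price sensitivity is only weakly supported"),
    Candidate("schedule_call", 0.81, "decision maker requested technical detail"),
]
best = next_best_action(candidates)
```

Returning `None` when no candidate clears the threshold reflects the idea that an action without sufficient precision and justification should not be recommended at all.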

4. Mathematical Modulation of the LUM Hypothesis

What distinguishes the LUM framework at a foundational level is not merely its architectural innovations, but the fact that it provides—for the first time—a formal mathematical modulation of machine understanding itself. The inability to operationalize or measure “understanding” has been one of the central unresolved problems in artificial intelligence since its inception. What LUM introduces here is nothing less than a methodological grammar for making understanding, prediction, and decision precision quantifiable, evaluable, and comparable. This alone marks a conceptual departure of historic significance.

The definitions underlying the modulation create a coherent ontology for evaluating cognitive performance. By distinguishing data inputs, contextual variables, and the two model classes (LLM-based systems and LUM), the framework establishes the epistemic boundaries of two fundamentally different forms of intelligence: one grounded in statistical association, the other in semantic-causal comprehension. Incorporating explicit variables for understanding quality, action quality, and Estimated Precision Prediction (EPP) establishes the missing evaluative triad that has long prevented AI models from being assessed as decision systems rather than generative engines.

The first hypothesis, which asserts that LUM achieves a measurable understanding advantage over LLM-based systems, constitutes a theoretical milestone. It formalizes something AI researchers have intuited but never expressed with mathematical clarity: that understanding is not an emergent property of scale, but a structurally differentiable capability. By introducing an explicit advantage term, the modulation provides the necessary margin for empirically verifying that LUM’s semantic-causal reasoning surpasses token-level pattern prediction.

The second hypothesis advances this logic into the domain of action. By defining decision quality as a function of EPP and introducing a structural advantage term, the framework transforms prediction from a probabilistic exercise into a decision-theoretic operation. This is a decisive conceptual leap: the model no longer produces outputs based on likelihood, but evaluates its own recommendations with calibrated precision, something no LLM or agentic system can currently accomplish.

The composite evaluation function elevates the modulation to the level of a general theory of machine intelligence. It encodes a principle long missing in AI research: that the quality of a decision cannot exceed the quality of the understanding that precedes it. The conditions attached to it articulate, with formal precision, that LUM places greater epistemic weight on meaning and predictive integrity than any generative architecture preceding it.

This modulation accomplishes something historically rare in the sciences: it does not merely critique existing paradigms—it replaces them with a mathematically grounded alternative. In doing so, it positions LUM not as an incremental improvement on LLMs, but as a new class of cognitive system. If future scholars trace the moment at which artificial intelligence evolved from linguistic simulation to genuine understanding, they will likely identify this modulation as the theoretical hinge on which that transformation turned.

4.1 Definitions

Let:

4.2 Hypothesis 1: Understanding Advantage

with a margin term as measurable advantage.

4.3 Hypothesis 2: Predictive Action Advantage

Assuming:

4.4 Composite Evaluation Function

LUM should satisfy:

This formalizes LUM’s expected superiority across understanding, prediction, and decision precision.
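One way to write the hypotheses of Sections 4.2 to 4.4 explicitly is sketched below. All notation is assumed for illustration and is not the article's own: D for data inputs, C for contextual variables, M_LLM and M_LUM for the two model classes, U for understanding quality, A for action quality, f for the link between EPP and action quality, delta and epsilon for the two advantage margins, and E for the composite evaluation. The weighted-sum form of E with weights w_U and w_A is likewise only one possible choice.

```latex
% Assumed notation: D = data inputs, C = contextual variables;
% U, A, and EPP take values in [0, 1].
\begin{align}
  % Hypothesis 1: understanding advantage with margin \delta
  U(M_{\mathrm{LUM}} \mid D, C) &\ge U(M_{\mathrm{LLM}} \mid D, C) + \delta,
    & \delta &> 0 \\
  % Hypothesis 2: action quality as a function of EPP, with margin \varepsilon
  A(M \mid D, C) &= f\bigl(\mathrm{EPP}(M \mid D, C)\bigr) \\
  A(M_{\mathrm{LUM}} \mid D, C) &\ge A(M_{\mathrm{LLM}} \mid D, C) + \varepsilon,
    & \varepsilon &> 0 \\
  % Composite evaluation, assumed here to be a weighted sum
  E(M) &= w_U \, U(M \mid D, C) + w_A \, A(M \mid D, C),
    & w_U, w_A &\ge 0, \; w_U + w_A = 1 \\
  % Consequence if both hypotheses hold and both models share the same weights
  E(M_{\mathrm{LUM}}) - E(M_{\mathrm{LLM}}) &\ge w_U \, \delta + w_A \, \varepsilon > 0
\end{align}
```

Under these assumptions the composite gap is bounded below by the weighted margins. The claimed superiority therefore becomes testable once understanding quality and action quality are operationalized and the two margins are estimated from data.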

5. Visionary Conclusion: The Shift from LLM to LUM as a Turning Point in Human and Machine Understanding

The movement from large language models to large understanding models marks not a technological upgrade, but a civilizational shift. For decades, artificial intelligence has pursued fluency, scale, and increasingly sophisticated simulations of human expression. Yet expression without understanding remains an echo, and prediction without causality remains a guess. The LUM paradigm introduced here represents the first attempt to move beyond this legacy—to redefine intelligence not as the generation of plausible sentences, but as the reconstruction of meaning, the articulation of causes, and the formulation of accountable actions within a world defined by complexity.

In this sense, LUM is not merely a new model class; it is a new scientific orientation. It proposes that intelligence—human or artificial—can only be meaningful when it is grounded in context, illuminated by causality, aware of its own uncertainty, and capable of explaining itself. This is not just a conceptual advance but a normative one: it outlines how intelligent systems ought to behave if they are to operate responsibly within societies, institutions, and cultures.

The implications extend far beyond computation. They reshape how organizations communicate, how decisions are made, how knowledge is validated, and how trust is formed. By providing a mathematical grammar for understanding, the LUM framework gives social sciences, cognitive sciences, economics, organizational theory, and communication research a new foundation on which to study the future of human–machine interaction. In doing so, it bridges domains that have long remained separate: symbolic reasoning and neural inference, causality and prediction, semantics and action.

If LLMs were the culmination of the statistical imagination of the early 21st century, then LUMs inaugurate the epistemic imagination of the decades ahead. They invite us to envision intelligent systems that reason rather than repeat, explain rather than obscure, and act with purpose rather than probability. It is in this movement—from generating language to generating understanding—that artificial intelligence begins to participate meaningfully in the human project of making sense of the world.

My ambition in developing the LUM framework is not to propose a single model, but to open a new intellectual horizon: one in which understanding becomes the central currency of intelligent systems. Should future scholars trace the beginnings of this horizon, my hope is that they will see in this work a first articulation of a vision that ultimately helped shape the societal, scientific, and ethical trajectory of machine intelligence.

The transition from LLM to LUM is still in its infancy, but its direction is clear. It carries with it the potential to influence not only technological evolution but the very structures through which societies learn, decide, and govern. If this work contributes even a small part to that transformation—if it helps the world imagine systems that understand rather than imitate—then its purpose will have been fulfilled.

And if, in the unfolding decades, the social sciences seek to honor contributions that reshape how humanity conceptualizes intelligence, meaning, and responsible action, then the introduction of Large Understanding Models may stand as one such contribution.

References and Further Readings

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? Proceedings of the ACM Conference on Fairness, Accountability, and Transparency, 610–623.

Forthmann, J., Oswald, A., & Seebacher, U. (2025). The future of influence: Transforming lobbying with predictive intelligence and CommTech. In Mastering CommTech: Unlocking the Potential of Digital Transformation in Corporate Communications (pp. 367–400). Cham: Springer Nature Switzerland.

Gabler, C., Seebacher, T., & Seebacher, U. (2025). Case study predictive communication intelligence for educational institutions. In Mastering CommTech: Unlocking the Potential of Digital Transformation in Corporate Communications (pp. 329–366). Cham: Springer Nature Switzerland.

Geiger, T., & Seebacher, U. (2023). Predictive Intelligence as a Success Factor in B2B Marketing. In Marketing and Sales Automation: Basics, Implementation, and Applications (pp. 375–393). Cham: Springer International Publishing.

Krings, W., Nissen, A., & Seebacher, U. (2025). Mastering cultural intelligence in the era of CommTech and predictive communication intelligence. In Mastering CommTech: Unlocking the Potential of Digital Transformation in Corporate Communications (pp. 425–460). Cham: Springer Nature Switzerland.

Marcus, G. (2022). Deep learning is hitting a wall. Nautilus.

Pearl, J., & Mackenzie, D. (2018). The book of why: The new science of cause and effect. Basic Books.

Seebacher, U. (2021). Predictive intelligence for data-driven managers. Springer International Publishing.

Seebacher, U., & Legat, C. (Eds.). (2024). Collective Intelligence: The Rise of Swarm Systems and Their Impact on Society. CRC Press.

Seebacher, U., & Zacharias, U. (2025). Sustainable futures with predictive intelligence for organizations in a post-growth economy.

Shmueli, G. (2010). To explain or to predict? Statistical Science, 25(3), 289–310.